The function irace implements the Iterated Racing procedure for parameter

tuning. It receives a configuration scenario and a parameter space to be

tuned, and returns the best configurations found, namely, the elite

configurations obtained from the last iterations. As a first step, it checks

the correctness of scenario using checkScenario() and recovers a

previous execution if scenario$recoveryFile is set. A R data file log of

the execution is created in scenario$logFile.

Arguments

- scenario

list()

Data structure containing irace settings. The data structure has to be the one returned by the functiondefaultScenario()orreadScenario().

Value

(data.frame)

A data frame with the set of best algorithm configurations found by irace. The data frame has the following columns:

.ID.: Internal id of the candidate configuration.Parameter names: One column per parameter name inparameters..PARENT.: Internal id of the parent candidate configuration.

Additionally, this function saves an R data file containing an object called

iraceResults. The path of the file is indicated in scenario$logFile.

The iraceResults object is a list with the following structure:

scenarioThe scenario R object containing the irace options used for the execution. See

defaultScenariofor more information. The elementscenario$parameterscontains the parameters R object that describes the target algorithm parameters. SeereadParameters.allConfigurationsThe target algorithm configurations generated by irace. This object is a data frame, each row is a candidate configuration, the first column (

.ID.) indicates the internal identifier of the configuration, the following columns correspond to the parameter values, each column named as the parameter name specified in the parameter object. The final column (.PARENT.) is the identifier of the configuration from which model the actual configuration was sampled.allElitesA list that contains one element per iteration, each element contains the internal identifier of the elite candidate configurations of the corresponding iteration (identifiers correspond to

allConfigurations$.ID.).iterationElitesA vector containing the best candidate configuration internal identifier of each iteration. The best configuration found corresponds to the last one of this vector.

experimentsA matrix with configurations as columns and instances as rows. Column names correspond to the internal identifier of the configuration (

allConfigurations$.ID.).experimen_logA

data.tablewith columnsiteration,instance,configuration,time. This matrix contains the log of all the experiments that irace performs during its execution. The instance column refers to the index of therace_state$instances_logdata frame. Time is saved ONLY when reported by thetargetRunner.softRestartA logical vector that indicates if a soft restart was performed on each iteration. If

FALSE, then no soft restart was performed.stateAn environment that contains the state of irace, the recovery is done using the information contained in this object.

testingA list that contains the testing results. The elements of this list are:

experimentsa matrix with the testing experiments of the selected configurations in the same format as the explained above andseedsa vector with the seeds used to execute each experiment.

Details

The execution of this function is reproducible under some conditions. See the FAQ section in the User Guide.

See also

irace_main()a higher-level interface to

irace().irace_cmdline()a command-line interface to

irace().readScenario()for reading a configuration scenario from a file.

readParameters()read the target algorithm parameters from a file.

defaultScenario()returns the default scenario settings of irace.

checkScenario()to check that the scenario is valid.

Examples

if (FALSE) { # \dontrun{

# In general, there are three steps:

scenario <- readScenario(filename = "scenario.txt")

irace(scenario = scenario)

} # }

#######################################################################

# This example illustrates how to tune the parameters of the simulated

# annealing algorithm (SANN) provided by the optim() function in the

# R base package. The goal in this example is to optimize instances of

# the following family:

# f(x) = lambda * f_rastrigin(x) + (1 - lambda) * f_rosenbrock(x)

# where lambda follows a normal distribution whose mean is 0.9 and

# standard deviation is 0.02. f_rastrigin and f_rosenbrock are the

# well-known Rastrigin and Rosenbrock benchmark functions (taken from

# the cmaes package). In this scenario, different instances are given

# by different values of lambda.

#######################################################################

## First we provide an implementation of the functions to be optimized:

f_rosenbrock <- function (x) {

d <- length(x)

z <- x + 1

hz <- z[1L:(d - 1L)]

tz <- z[2L:d]

sum(100 * (hz^2 - tz)^2 + (hz - 1)^2)

}

f_rastrigin <- function (x) {

sum(x * x - 10 * cos(2 * pi * x) + 10)

}

## We generate 20 instances (in this case, weights):

weights <- rnorm(20, mean = 0.9, sd = 0.02)

## On this set of instances, we are interested in optimizing two

## parameters of the SANN algorithm: tmax and temp. We setup the

## parameter space as follows:

parameters_table <- '

tmax "" i,log (1, 5000)

temp "" r (0, 100)

'

## We use the irace function readParameters to read this table:

parameters <- readParameters(text = parameters_table)

## Next, we define the function that will evaluate each candidate

## configuration on a single instance. For simplicity, we restrict to

## three-dimensional functions and we set the maximum number of

## iterations of SANN to 1000.

target_runner <- function(experiment, scenario)

{

instance <- experiment$instance

configuration <- experiment$configuration

D <- 3

par <- runif(D, min=-1, max=1)

fn <- function(x) {

weight <- instance

return(weight * f_rastrigin(x) + (1 - weight) * f_rosenbrock(x))

}

# For reproducible results, we should use the random seed given by

# experiment$seed to set the random seed of the target algorithm.

res <- withr::with_seed(experiment$seed,

stats::optim(par,fn, method="SANN",

control=list(maxit=1000

, tmax = as.numeric(configuration[["tmax"]])

, temp = as.numeric(configuration[["temp"]])

)))

## This list may also contain:

## - 'time' if irace is called with 'maxTime'

## - 'error' is a string used to report an error

## - 'outputRaw' is a string used to report the raw output of calls to

## an external program or function.

## - 'call' is a string used to report how target_runner called the

## external program or function.

return(list(cost = res$value))

}

## We define a configuration scenario by setting targetRunner to the

## function define above, instances to the first 10 random weights, and

## a maximum budget of 'maxExperiments' calls to targetRunner.

scenario <- list(targetRunner = target_runner,

instances = weights[1:10],

maxExperiments = 500,

# Do not create a logFile

logFile = "",

parameters = parameters)

## We check that the scenario is valid. This will also try to execute

## target_runner.

checkIraceScenario(scenario)

#> # 2026-02-04 08:50:40 UTC: Checking scenario

#> ## irace scenario:

#> scenarioFile = "./scenario.txt"

#> execDir = "/home/runner/work/irace/irace/docs/reference"

#> parameterFile = "/home/runner/work/irace/irace/docs/reference/parameters.txt"

#> parameters = <environment>

#> initConfigurations = NULL

#> configurationsFile = ""

#> logFile = ""

#> recoveryFile = ""

#> instances = c(0.894345891023711, 0.888926012326226, 0.91257964084072, 0.941300497907185, 0.867380211958355, 0.910248538997036, 0.862739770158633, 0.889559749705091, 0.898947961800922, 0.910859926853223)

#> trainInstancesDir = ""

#> trainInstancesFile = ""

#> sampleInstances = TRUE

#> testInstancesDir = ""

#> testInstancesFile = ""

#> testInstances = NULL

#> testNbElites = 1L

#> testIterationElites = FALSE

#> testType = "friedman"

#> firstTest = 5L

#> blockSize = 1L

#> eachTest = 1L

#> targetRunner = function (experiment, scenario) { instance <- experiment$instance configuration <- experiment$configuration D <- 3 par <- runif(D, min = -1, max = 1) fn <- function(x) { weight <- instance return(weight * f_rastrigin(x) + (1 - weight) * f_rosenbrock(x)) } res <- withr::with_seed(experiment$seed, stats::optim(par, fn, method = "SANN", control = list(maxit = 1000, tmax = as.numeric(configuration[["tmax"]]), temp = as.numeric(configuration[["temp"]])))) return(list(cost = res$value))}

#> targetRunnerLauncher = ""

#> targetCmdline = "{configurationID} {instanceID} {seed} {instance} {bound} {targetRunnerArgs}"

#> targetRunnerRetries = 0L

#> targetRunnerTimeout = 0L

#> targetRunnerData = ""

#> targetRunnerParallel = NULL

#> targetEvaluator = NULL

#> deterministic = FALSE

#> maxExperiments = 500L

#> minExperiments = NA_character_

#> maxTime = 0L

#> budgetEstimation = 0.05

#> minMeasurableTime = 0.01

#> parallel = 0L

#> loadBalancing = TRUE

#> mpi = FALSE

#> batchmode = "0"

#> quiet = FALSE

#> debugLevel = 2L

#> seed = NA_character_

#> softRestart = TRUE

#> softRestartThreshold = 1e-04

#> elitist = TRUE

#> elitistNewInstances = 1L

#> elitistLimit = 2L

#> repairConfiguration = NULL

#> capping = FALSE

#> cappingAfterFirstTest = FALSE

#> cappingType = "median"

#> boundType = "candidate"

#> boundMax = NULL

#> boundDigits = 0L

#> boundPar = 1L

#> boundAsTimeout = TRUE

#> postselection = TRUE

#> aclib = FALSE

#> nbIterations = 0L

#> nbExperimentsPerIteration = 0L

#> minNbSurvival = 0L

#> nbConfigurations = 0L

#> mu = 5L

#> confidence = 0.95

#> ## end of irace scenario

#> # 2026-02-04 08:50:40 UTC: Checking target runner.

#> # 2026-02-04 08:50:40 UTC: Executing targetRunner (2 times)...

#> # targetRunner returned:

#> [[1]]

#> [[1]]$cost

#> [1] 0.725405013167655

#>

#>

#> [[2]]

#> [[2]]$cost

#> [1] 17.394187824647

#>

#>

#> # 2026-02-04 08:50:40 UTC: Check successful.

#> [1] TRUE

# \donttest{

## We are now ready to launch irace. We do it by means of the irace

## function. The function will print information about its

## progress. This may require a few minutes, so it is not run by default.

tuned_confs <- irace(scenario = scenario)

#> # 2026-02-04 08:50:40 UTC: Initialization

#> # Elitist race

#> # Elitist new instances: 1

#> # Elitist limit: 2

#> # nbIterations: 3

#> # minNbSurvival: 3

#> # nbParameters: 2

#> # seed: 1278720907

#> # confidence level: 0.95

#> # budget: 500

#> # mu: 5

#> # deterministic: FALSE

#>

#> # 2026-02-04 08:50:40 UTC: Iteration 1 of 3

#> # experimentsUsed: 0

#> # remainingBudget: 500

#> # currentBudget: 166

#> # nbConfigurations: 27

#> # Markers:

#> x No test is performed.

#> c Configurations are discarded only due to capping.

#> - The test is performed and some configurations are discarded.

#> = The test is performed but no configuration is discarded.

#> ! The test is performed and configurations could be discarded but elite configurations are preserved.

#> . Alive configurations were already evaluated on this instance and nothing is discarded.

#> : All alive configurations are elite, but some need to be evaluated on this instance.

#>

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> | | Instance| Alive| Best| Mean best| Exp so far| W time| rho|KenW| Qvar|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> |x| 1| 27| 21| 0.02737696070| 27|00:00:00| NA| NA| NA|

#> |x| 2| 27| 6| 0.3707229146| 54|00:00:00|+0.11|0.56|0.9005|

#> |x| 3| 27| 8| 0.9896523391| 81|00:00:00|+0.21|0.47|0.8358|

#> |x| 4| 27| 3| 2.019881568| 108|00:00:00|+0.21|0.40|0.8017|

#> |-| 5| 17| 15| 1.503182815| 135|00:00:00|-0.05|0.16|0.9819|

#> |=| 6| 17| 21| 1.633193966| 152|00:00:00|-0.02|0.15|0.9667|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> Best-so-far configuration: 21 mean value: 1.633193966

#> Description of the best-so-far configuration:

#> .ID. tmax temp .PARENT.

#> 21 21 12 30.9332 NA

#>

#> # 2026-02-04 08:50:41 UTC: Elite configurations (first number is the configuration ID; listed from best to worst according to the sum of ranks):

#> tmax temp

#> 21 12 30.9332

#> 1 28 40.3082

#> 3 3 65.3082

#> # 2026-02-04 08:50:41 UTC: Iteration 2 of 3

#> # experimentsUsed: 152

#> # remainingBudget: 348

#> # currentBudget: 174

#> # nbConfigurations: 27

#> # Markers:

#> x No test is performed.

#> c Configurations are discarded only due to capping.

#> - The test is performed and some configurations are discarded.

#> = The test is performed but no configuration is discarded.

#> ! The test is performed and configurations could be discarded but elite configurations are preserved.

#> . Alive configurations were already evaluated on this instance and nothing is discarded.

#> : All alive configurations are elite, but some need to be evaluated on this instance.

#>

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> | | Instance| Alive| Best| Mean best| Exp so far| W time| rho|KenW| Qvar|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> |x| 7| 27| 50| 0.02716132869| 27|00:00:00| NA| NA| NA|

#> |x| 6| 27| 29| 0.1116707068| 51|00:00:00|+0.09|0.54|1.1419|

#> |x| 5| 27| 29| 0.6516258709| 75|00:00:00|-0.11|0.26|1.0862|

#> |x| 4| 27| 29| 0.5837232852| 99|00:00:00|-0.08|0.19|0.9976|

#> |=| 1| 27| 29| 1.058291352| 123|00:00:00|-0.00|0.20|0.9397|

#> |=| 3| 27| 29| 0.8858098623| 147|00:00:00|+0.01|0.17|0.9632|

#> |=| 2| 27| 29| 0.7701989144| 171|00:00:00|+0.01|0.15|0.9736|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> Best-so-far configuration: 29 mean value: 0.7701989144

#> Description of the best-so-far configuration:

#> .ID. tmax temp .PARENT.

#> 29 29 5 22.7613 21

#>

#> # 2026-02-04 08:50:42 UTC: Elite configurations (first number is the configuration ID; listed from best to worst according to the sum of ranks):

#> tmax temp

#> 29 5 22.7613

#> 39 3 62.3892

#> 21 12 30.9332

#> # 2026-02-04 08:50:42 UTC: Iteration 3 of 3

#> # experimentsUsed: 323

#> # remainingBudget: 177

#> # currentBudget: 177

#> # nbConfigurations: 24

#> # Markers:

#> x No test is performed.

#> c Configurations are discarded only due to capping.

#> - The test is performed and some configurations are discarded.

#> = The test is performed but no configuration is discarded.

#> ! The test is performed and configurations could be discarded but elite configurations are preserved.

#> . Alive configurations were already evaluated on this instance and nothing is discarded.

#> : All alive configurations are elite, but some need to be evaluated on this instance.

#>

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> | | Instance| Alive| Best| Mean best| Exp so far| W time| rho|KenW| Qvar|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> |x| 8| 24| 61| 0.02845033566| 24|00:00:00| NA| NA| NA|

#> |x| 1| 24| 70| 0.06101645944| 45|00:00:00|+0.16|0.58|0.8968|

#> |x| 6| 24| 70| 0.1401384474| 66|00:00:00|+0.09|0.40|0.9089|

#> |x| 5| 24| 21| 0.2339498155| 87|00:00:00|+0.10|0.32|0.9357|

#> |=| 7| 24| 70| 0.8009782052| 108|00:00:00|+0.07|0.26|0.9730|

#> |=| 4| 24| 70| 0.6842251336| 129|00:00:00|+0.02|0.19|1.0083|

#> |=| 3| 24| 70| 0.6494138345| 150|00:00:00|+0.03|0.17|1.0154|

#> |=| 2| 24| 29| 0.6832859696| 171|00:00:00|+0.05|0.17|0.9761|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> Best-so-far configuration: 29 mean value: 0.6832859696

#> Description of the best-so-far configuration:

#> .ID. tmax temp .PARENT.

#> 29 29 5 22.7613 21

#>

#> # 2026-02-04 08:50:43 UTC: Elite configurations (first number is the configuration ID; listed from best to worst according to the sum of ranks):

#> tmax temp

#> 29 5 22.7613

#> 70 6 22.0074

#> 71 12 35.5064

#> # 2026-02-04 08:50:43 UTC: Stopped because there is not enough budget left to race more than the minimum (3).

#> # You may either increase the budget or set 'minNbSurvival' to a lower value.

#> # Iteration: 4

#> # nbIterations: 4

#> # experimentsUsed: 494

#> # timeUsed: 0

#> # remainingBudget: 6

#> # currentBudget: 6

#> # number of elites: 3

#> # nbConfigurations: 3

#> # Total CPU user time: 3.534, CPU sys time: 0.006, Wall-clock time: 3.541

#> # 2026-02-04 08:50:43 UTC: Starting post-selection:

#> # Configurations selected: 29, 70, 71, 39, 21, 52.

#> # Pending instances: 0, 0, 0, 0, 0, 0.

#> # 2026-02-04 08:50:43 UTC: seed: 1278720907

#> # Configurations: 6

#> # Available experiments: 6

#> # minSurvival: 1

#> # Markers:

#> x No test is performed.

#> c Configurations are discarded only due to capping.

#> - The test is performed and some configurations are discarded.

#> = The test is performed but no configuration is discarded.

#> ! The test is performed and configurations could be discarded but elite configurations are preserved.

#> . Alive configurations were already evaluated on this instance and nothing is discarded.

#> : All alive configurations are elite, but some need to be evaluated on this instance.

#>

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> | | Instance| Alive| Best| Mean best| Exp so far| W time| rho|KenW| Qvar|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> |.| 3| 6| 29| 0.02340241215| 0|00:00:00| NA| NA| NA|

#> |.| 1| 6| 29| 1.489983016| 0|00:00:00|-0.60|0.20|1.1563|

#> |.| 8| 6| 29| 1.018287130| 0|00:00:00|+0.05|0.37|0.7853|

#> |.| 6| 6| 29| 0.8094184284| 0|00:00:00|+0.01|0.26|0.8946|

#> |.| 7| 6| 29| 0.6556405605| 0|00:00:00|+0.15|0.32|0.8287|

#> |.| 4| 6| 29| 0.6097030552| 0|00:00:00|+0.19|0.33|0.8412|

#> |.| 5| 6| 29| 0.7699649329| 0|00:00:00|+0.11|0.23|0.8135|

#> |.| 2| 6| 29| 0.6832859696| 0|00:00:00|+0.18|0.29|0.7575|

#> |=| 9| 6| 29| 2.664461386| 6|00:00:00|+0.07|0.17|0.8242|

#> +-+-----------+-----------+-----------+----------------+-----------+--------+-----+----+------+

#> Best-so-far configuration: 29 mean value: 2.664461386

#> Description of the best-so-far configuration:

#> .ID. tmax temp .PARENT.

#> 29 29 5 22.7613 21

#>

#> # 2026-02-04 08:50:44 UTC: Elite configurations (first number is the configuration ID; listed from best to worst according to the sum of ranks):

#> tmax temp

#> 29 5 22.7613

#> 70 6 22.0074

#> 39 3 62.3892

#> # Total CPU user time: 3.601, CPU sys time: 0.008, Wall-clock time: 3.61

## We can print the best configurations found by irace as follows:

configurations_print(tuned_confs)

#> tmax temp

#> 29 5 22.7613

#> 70 6 22.0074

#> 39 3 62.3892

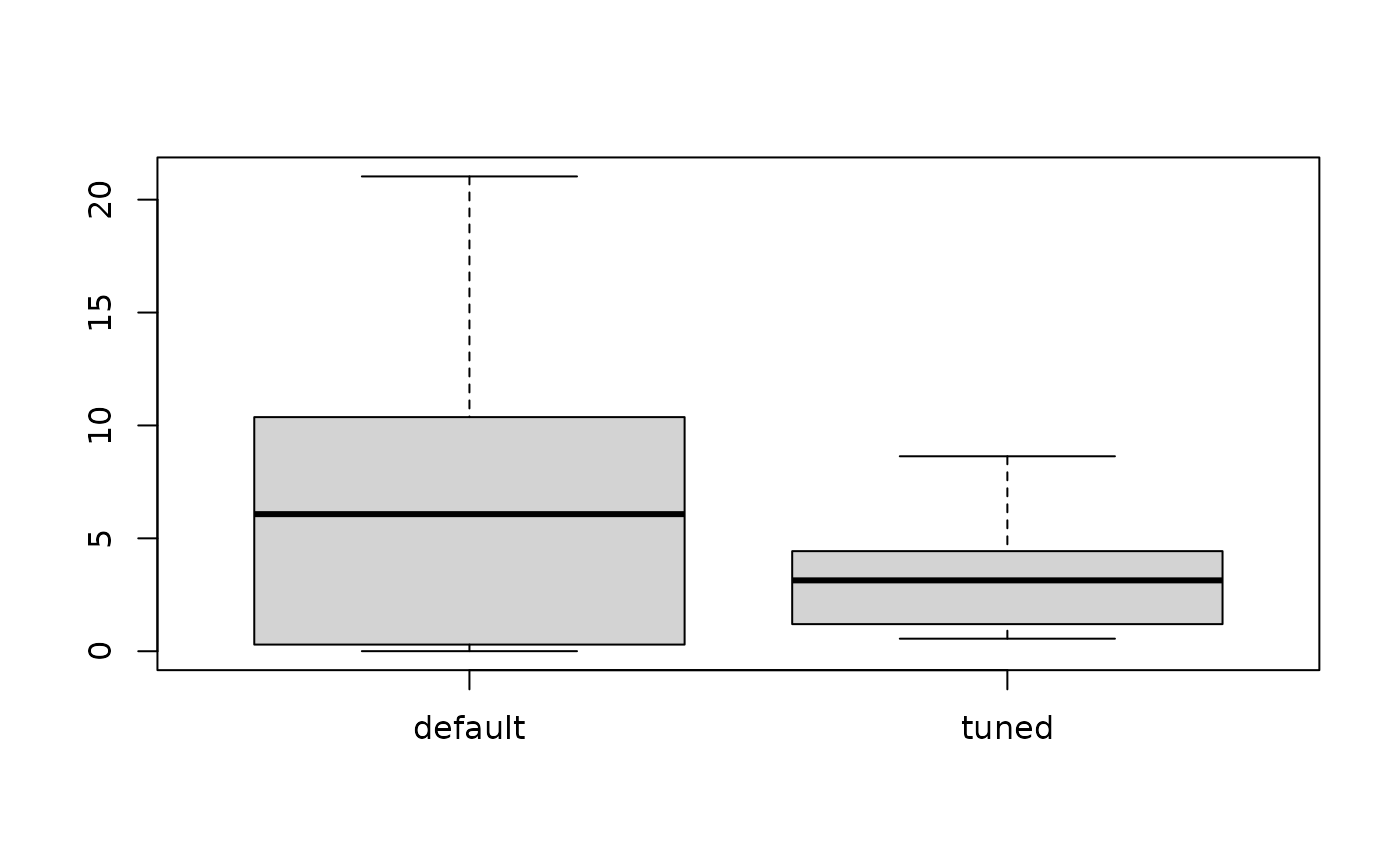

## We can evaluate the quality of the best configuration found by

## irace versus the default configuration of the SANN algorithm on

## the other 10 instances previously generated.

test_index <- 11:20

test_seeds <- sample.int(2147483647L, size = length(test_index), replace = TRUE)

test <- function(configuration)

{

res <- lapply(seq_along(test_index),

function(x) target_runner(

experiment = list(instance = weights[test_index[x]],

seed = test_seeds[x],

configuration = configuration),

scenario = scenario))

return (sapply(res, getElement, name = "cost"))

}

## To do so, first we apply the default configuration of the SANN

## algorithm to these instances:

default <- test(data.frame(tmax=10, temp=10))

## We extract and apply the winning configuration found by irace

## to these instances:

tuned <- test(removeConfigurationsMetaData(tuned_confs[1,]))

## Finally, we can compare using a boxplot the quality obtained with the

## default parametrization of SANN and the quality obtained with the

## best configuration found by irace.

boxplot(list(default = default, tuned = tuned))

# }

# }